Prompt Engineering: The Ultimate Guide 2023 [GPT-3 & ChatGPT]

Prompt engineering is one of the highest-income skills that you can learn in 2023. Those equipped with it are capable of creating millions of dollars’ worth of value with just a few carefully crafted sentences.

In this prompt engineering guide, I will not only unpack this highly lucrative skill and take you step by step through learning it, but also share with you exactly how I have been using this skill to make money and build businesses.

And no, you don’t need any coding experience. This guide is intended for anyone looking to add the foundational skill of prompt engineering to their toolkit so they can access more opportunities with AI.

What is Prompt Engineering?

In plain English, prompting is the process of instructing an AI to do a task. We give the AI (for example, GPT-3) a set of instructions, and it performs the task based on those instructions. Prompts can vary in complexity from a phrase to a question to multiple paragraphs’ worth of text.

I’m sure you’ve all played around with ChatGPT. The text that you type into that dialog box is your prompt.

However, most of the value created through prompting is not created with ChatGPT. More on this later.

The reason prompt engineering, or more simply put, how you construct your prompts, is so important and so valuable is because of a concept called garbage in, garbage out.

Essentially, the quality of your input determines the quality of your output. Large language models like GPT-3 are massive and are essentially a soup of data, so your ability to write great prompts directly determines your ability to extract value from them.

ChatGPT vs OpenAI Playground

For this prompt engineering guide, we will be using the OpenAI Playground for our prompting.

It is crucial to understand that the playground is not the same as ChatGPT. If you’re unfamiliar with the playground, it provides us with a flexible platform where we can interact with the whole OpenAI suite of products in their natural state.

And by natural state, I mean the form in which we get access to them through the OpenAI APIs.

This is important to understand because anything that you can achieve within the playground can then be scaled and then productized and sold. More on that later.
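To make the link between the playground and the API a bit more concrete, here is a minimal sketch of how a prompt plus the sidebar settings covered later in this guide could be bundled into a request body. The field names follow the legacy OpenAI Completions API as I understand it; the helper name `build_completion_request` and the default values are my own assumptions, and no network call is made.

```python
import json


def build_completion_request(prompt, model="text-davinci-003", temperature=0.0,
                             max_tokens=256, frequency_penalty=0.0,
                             presence_penalty=0.0):
    """Bundle a prompt and playground-style settings into one request body.

    Hypothetical helper: it only builds a dictionary and sends nothing.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be a positive integer")
    return {
        "model": model,                          # which model to use
        "prompt": prompt,                        # the text you would type in the playground
        "temperature": temperature,              # randomness of the output
        "max_tokens": max_tokens,                # maximum length of the response
        "frequency_penalty": frequency_penalty,  # discourages repeated tokens
        "presence_penalty": presence_penalty,    # encourages new topics
    }


request = build_completion_request("What is the capital of France?")
print(json.dumps(request, indent=2))
```

Once a prompt works in the playground, the same prompt and settings are what an application would send on every request, which is what makes the result scalable.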

If you didn’t know, ChatGPT is actually an application that OpenAI has built on top of the GPT models that we’re going to be accessing through the playground.

The difference is that OpenAI has significantly changed GPT-3 in order to make ChatGPT, through reinforcement learning, fine-tuning, and a bunch of other fun stuff.

Long story short, ChatGPT may be fun and valuable in its own right, but if you’re looking to create value and build a scalable business on top of these models, you need to learn how to engineer prompts for the base models in their natural state.

This is because these base-level models are the only things that we can get access to through the APIs currently, and therefore, the only things that we can build businesses on top of.

Therefore, learning how to engineer prompts for the base models through the playground is going to be the focus of this guide.

OpenAI Playground Settings & How To Use It

Now, I’ll give you a rundown of the important OpenAI playground settings that you can play around with in the sidebar.

GPT Models

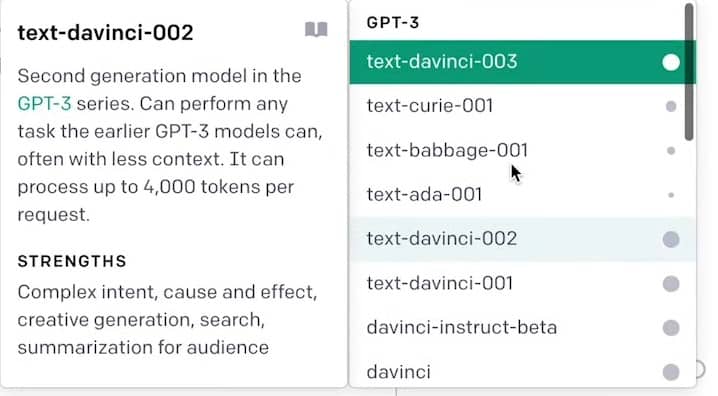

First and probably most importantly, you can change the model that you’re using to interact with.

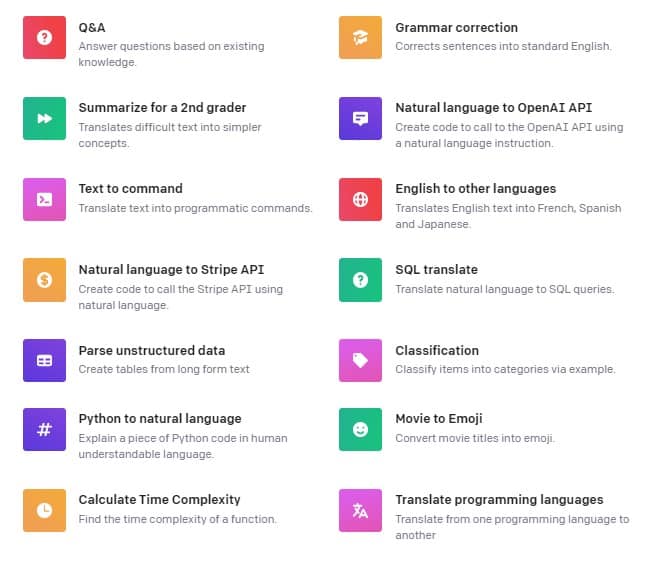

OpenAI has a ton of different models for different purposes.

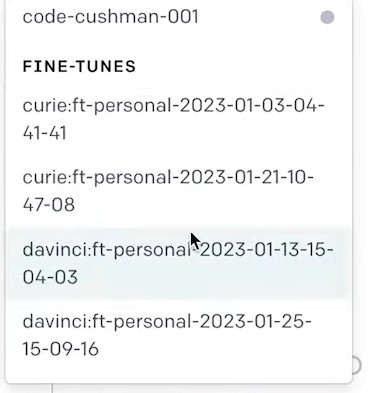

First, we have different versions of GPT-3 models like:

- Davinci

- Ada

- Curie

- Babbage

All of these models serve different functions.

The playground shows a short blurb about what each model does and what it’s good at.

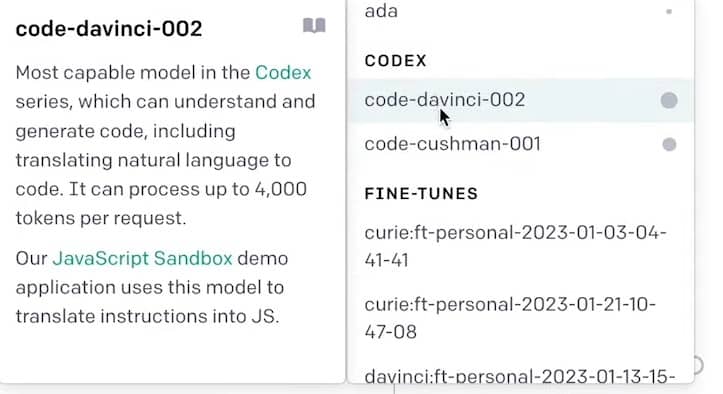

Then we have code-related models here which are more capable of understanding code.

And here I have some of my fine tunes from my personal account.

You may be thinking, why would I not just use the best one, which is Davinci 3 at the current time?

This is because the pricing for each of these models is different.

If you want to use these models for a very basic pattern recognition task, you shouldn’t be paying more to use the top model, Davinci 3. You could be using Curie or Ada or another cheaper model that does what you need and nothing you don’t.

Temperature

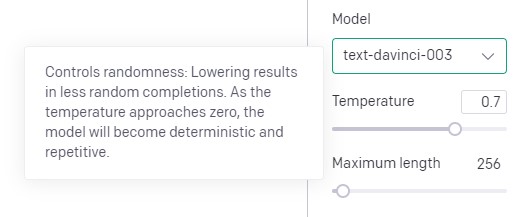

The next and second most important thing you can play around with is the temperature.

The temperature setting is crucial because it determines the randomness of your output.

Some tasks like creative writing or ideation would perform better if you increase the randomness. But in many cases, having the temperature at basically zero is going to be better if you want those rigid deterministic outputs.

Setting the temperature at zero can often be a very good way to ensure that you get the same results from essentially the same input.
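To see why temperature changes the randomness, here is a rough sketch of temperature-scaled sampling over a toy vocabulary. The three tokens and their scores are made up for illustration, and treating a temperature of zero as "always pick the top token" is a common convention that I am assuming here, not something the playground documents in this guide.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Assumed convention: zero temperature puts all probability on the top score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Pick one token at random according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens = ["Paris", "Lyon", "Nice"]  # toy vocabulary (illustrative)
logits = [3.0, 1.0, 0.5]            # toy scores (illustrative)
rng = random.Random(0)
for t in (0.0, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs], sample_token(tokens, logits, t, rng))
```

The higher the temperature, the flatter the probabilities become, so less likely tokens get picked more often.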

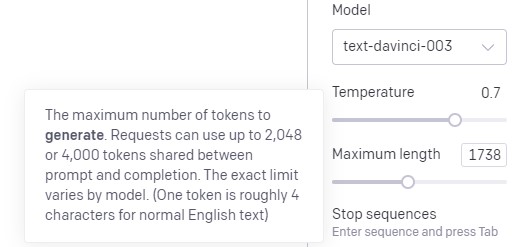

Maximum Length Setting

Next is the maximum length setting, which is an extremely important part of your prompt writing. These models have a strict limit on how much data you can pack into both the prompt and the response that you get back from the model.

This means that the prompt that you write and the expected response combined cannot go over roughly 4,000 tokens.

1 token is equal to roughly 4 characters in normal English text. The max length setting determines the maximum length of the response that the model will give back to you.

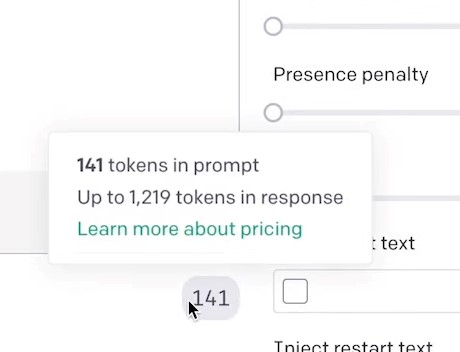

It’s important to do a little bit of quick math: check at the bottom of the screen how many tokens your prompt has taken up, then set your maximum length so that the prompt and the response together stay under 4,000 tokens.
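That quick math can be sketched in a few lines. This uses the rough "1 token is about 4 characters" rule and the 4,000 token limit from above; a real tokenizer will give somewhat different counts, and the function names here are just illustrative.

```python
import math

CONTEXT_LIMIT = 4000   # approximate combined limit quoted in this guide
CHARS_PER_TOKEN = 4    # rough rule of thumb for normal English text


def estimate_tokens(text):
    """Estimate the token count from the character count (an approximation only)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def max_response_tokens(prompt, context_limit=CONTEXT_LIMIT):
    """Return how many tokens are left for the response after the prompt."""
    remaining = context_limit - estimate_tokens(prompt)
    return max(remaining, 0)


prompt = "You are a brilliant mathematician who can solve any problem in the world. " * 20
print("Estimated prompt tokens:", estimate_tokens(prompt))
print("Largest safe maximum length:", max_response_tokens(prompt))
```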

Then we have a few minor settings that you can play around with, such as the frequency penalty and the presence penalty.

In some cases, these are very useful. You might notice that the model is repeating the same thing over and over and you don’t want that, which is where the frequency penalty helps. Or you might want it to talk about new topics more often, which is where the presence penalty helps.
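Here is a small sketch of how these two penalties are commonly described as working: each token's score is lowered in proportion to how many times it has already appeared (frequency), plus a flat amount if it has appeared at all (presence). The formula mirrors the way OpenAI's documentation describes it, as far as I understand it; the function name and the toy scores are assumptions for illustration.

```python
from collections import Counter


def apply_penalties(logits, generated_tokens, frequency_penalty=0.0,
                    presence_penalty=0.0):
    """Lower the scores of tokens that already appeared in the generated text."""
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, logit in logits.items():
        count = counts[token]
        # Frequency penalty grows with every repeat of the token.
        frequency_term = count * frequency_penalty
        # Presence penalty is a one-off cost once the token has appeared at all.
        presence_term = presence_penalty if count > 0 else 0.0
        adjusted[token] = logit - frequency_term - presence_term
    return adjusted


logits = {"cat": 2.0, "dog": 1.0}  # toy scores (illustrative)
already_generated = ["cat", "cat"]
print(apply_penalties(logits, already_generated,
                      frequency_penalty=0.5, presence_penalty=0.3))
```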

Now that’s out of the way, I can teach you your first method of prompt engineering, which is role prompting.

Role Prompting

In role prompting, if you couldn’t figure it out from the name, you use a prompt to set the AI into a certain role. For example, in your prompt, you could include “you are a doctor” or “you are a lawyer”, and then start asking it medical or legal questions.

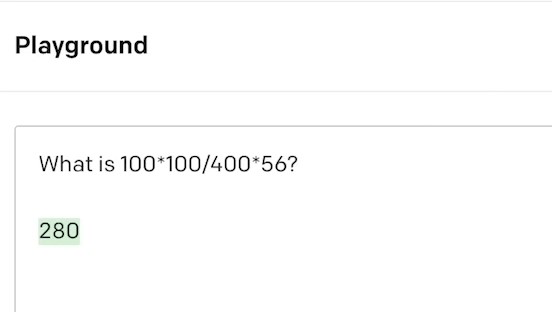

Here, we have a math problem to illustrate this role prompting.

Now, if I submit this equation, I get 280 as my result back, which is incorrect.

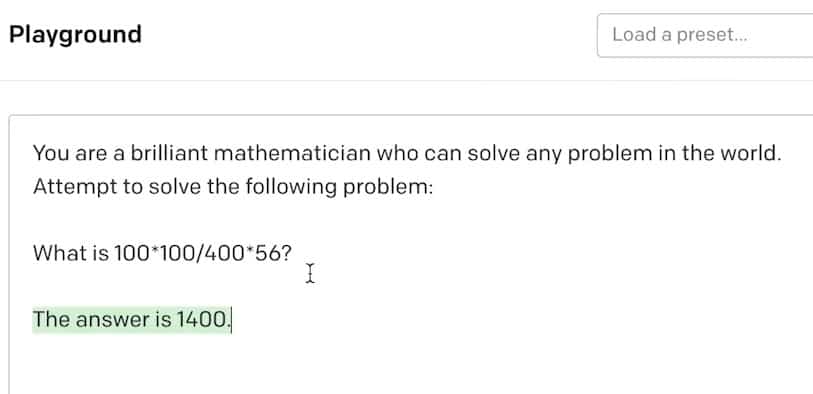

Now, if I go a few lines above it and add in this little Role Prompting section, suddenly the answer changes.

We get the answer of 1,400, which is actually the correct answer.

What we’ve done here is told it that it is a brilliant mathematician who can solve any problem in the world.

So this is setting it into the role of being a mathematician.

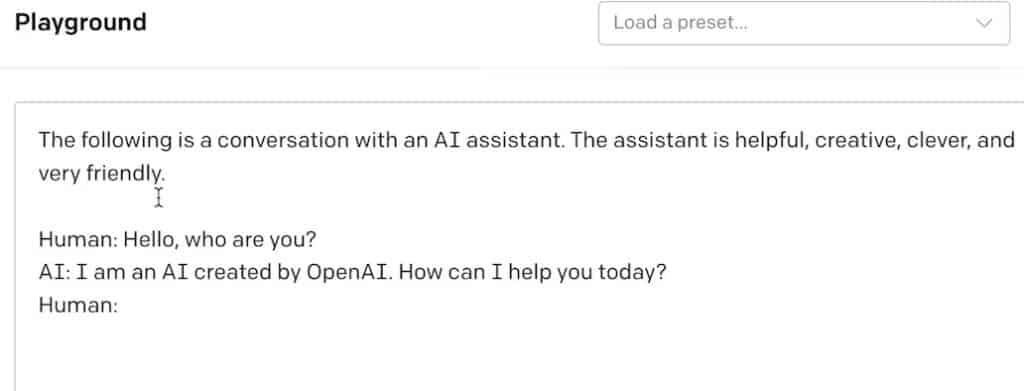

Or we can do what apps like ChatGPT have done and set the model into a friendly, helpful personal assistant mode.

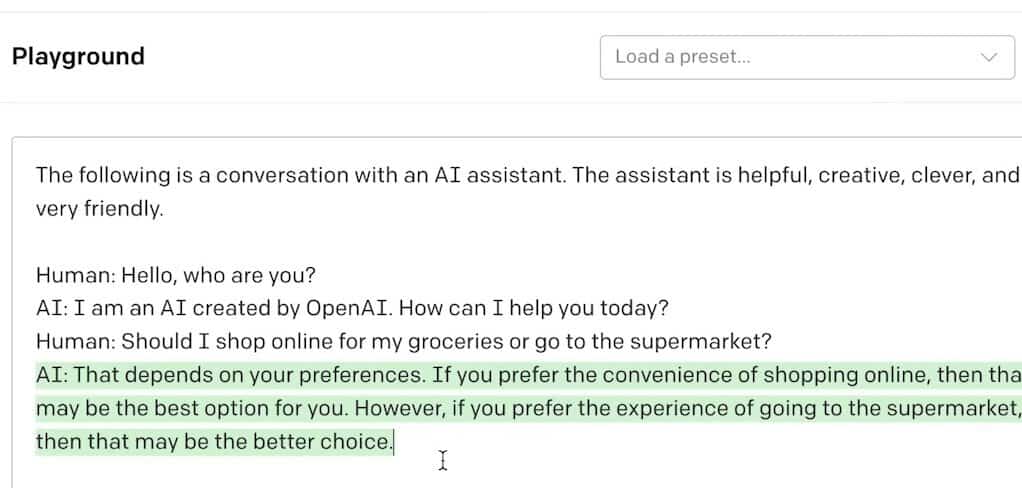

Here’s an example of a basic prompt that turns the model into a friendly AI assistant, using adjectives like helpful, creative, clever, and very friendly to really set that mode.

Now that it’s been set into this mode, I’m able to ask it the question, “Should I shop online for my groceries or go to the supermarket?”

Just like that, we’ve got a ChatGPT-like response, which is a friendly, helpful answer to our question.

Setting modes as we’ve just done is one of the fundamental tools within your prompt engineering toolkit.

When assigning a role to an AI, we’re helping it by giving it more context. With this context, the AI is able to better understand the question.

Not surprisingly, with a better understanding of the question, the AI will give better answers.
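As a rough sketch, role prompting boils down to putting a role description a few lines above the question. The helper name below is hypothetical, and the question is an invented stand-in, not the math problem from the screenshots.

```python
def build_role_prompt(role_description, question):
    """Place a role description above the question, separated by a blank line."""
    return f"{role_description.strip()}\n\n{question.strip()}"


role = "You are a brilliant mathematician who can solve any problem in the world."
question = "What is 12 multiplied by 15?"  # illustrative question only
print(build_role_prompt(role, question))
```

Swapping the role description for “You are a doctor.” or “You are a lawyer.” reuses the same structure for other kinds of questions.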

You may have noticed in that last prompt, we’ve actually shown an example of one interaction between the human and the bot.

This brings us to our next method: shot prompting.

Shot Prompting

Shot prompting can be broken down into three categories:

- Zero shot prompting

- One shot prompting

- Few shot prompting

Using these shot prompting methods is the easiest way I’ve found to build businesses with AI right now.

Zero Shot Prompting

Zero shot prompting is essentially using the AI as a massive autocomplete engine. You simply give it a question or a phrase and give it free rein to reply, without any expected structure.

Zero shot prompting is what we’ve been doing for most of this prompt engineering guide already.

Simple stuff like, “What is the capital of France?” “Paris.”

Going back to our previous example of the mathematician role prompt, this is also zero shot. We haven’t provided any structure or expectations on how to answer, so the AI decides for itself how to reply.

This brings us on to one shot prompting.

One Shot Prompting

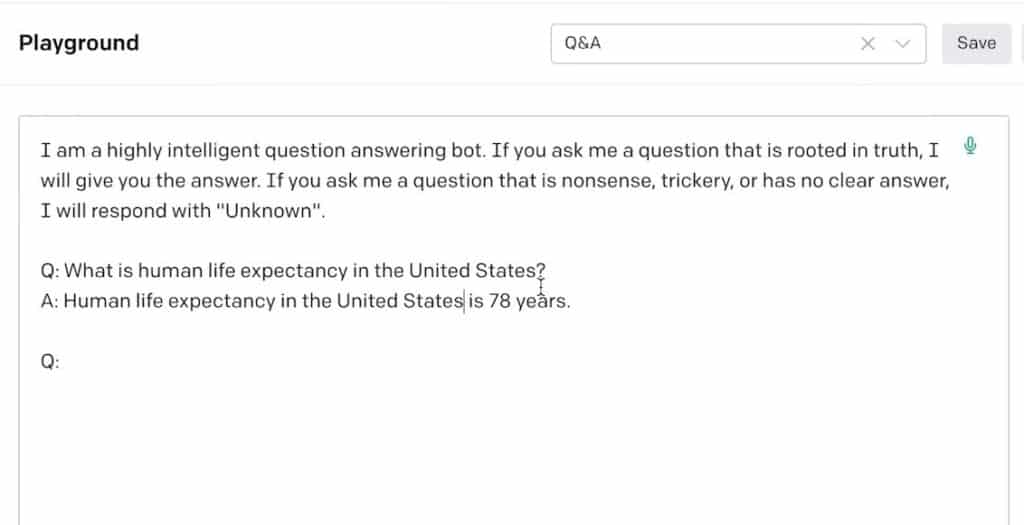

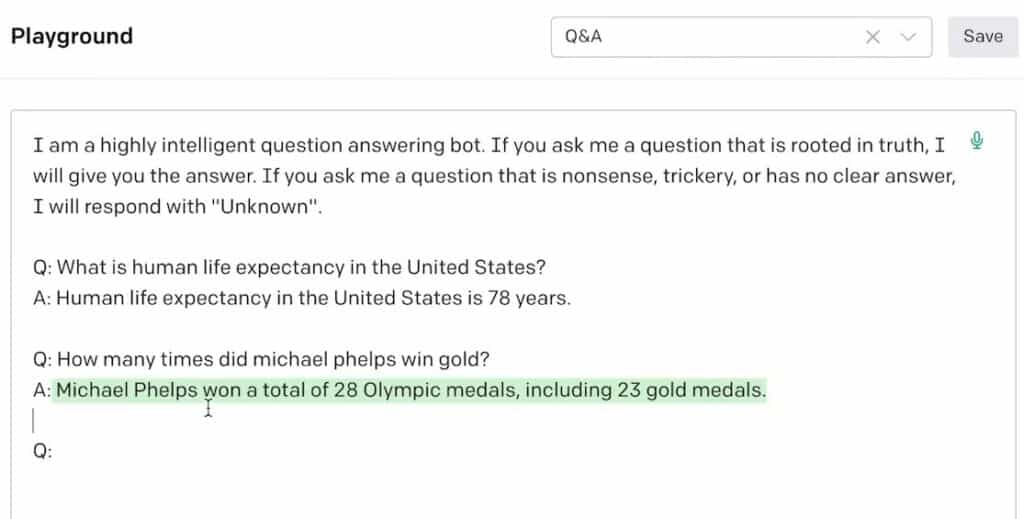

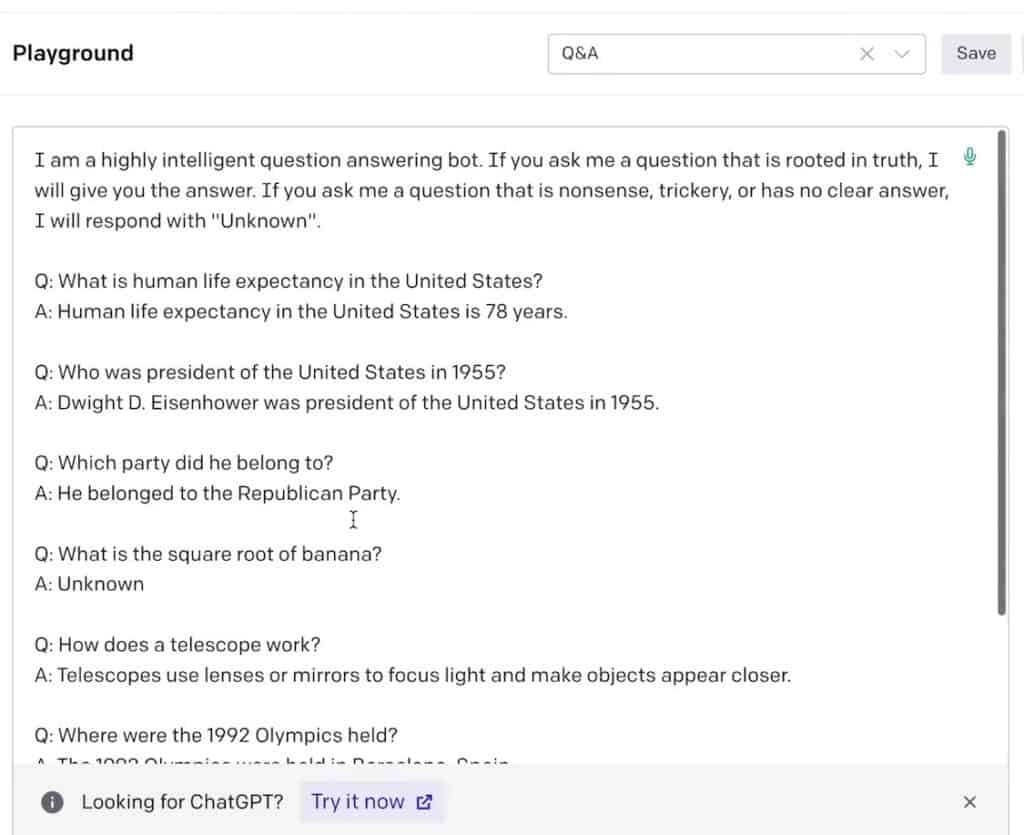

Here’s an example of one shot prompting mixed with a bit of role prompting as well.

We have a little bit of information to set it in a highly intelligent question-answering bot role. Below it, we have a one shot example of an interaction between the user and the AI.

So the question I’ll ask is “how many times did Michael Phelps win gold?”

Now when I enter my question within this, it’s not only going to take into account the role prompt above but also look at the structure and how it interacted with the one shot prompt above.

Here we have the answer, which is Michael Phelps won a total of 28 medals, including 23 gold.

These two question and answer pairs are very similar. The model has looked at the pattern and structure of the example above and answered in the same way, matching its tone and length.
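Here is a minimal sketch of how a one shot prompt like this could be assembled. The `Q:` and `A:` labels, the role text, and the example pair are my own assumptions for illustration, since the exact prompt only appears in the screenshots.

```python
def build_one_shot_prompt(role, example_question, example_answer, question):
    """Combine a role line, one worked example, and the new question."""
    return (
        f"{role}\n\n"
        f"Q: {example_question}\n"
        f"A: {example_answer}\n\n"
        f"Q: {question}\n"
        f"A:"  # left open so the model completes the answer in the same style
    )


prompt = build_one_shot_prompt(
    role="I am a highly intelligent question answering bot.",  # assumed wording
    example_question="What is the capital of France?",
    example_answer="The capital of France is Paris.",
    question="How many times did Michael Phelps win gold?",
)
print(prompt)
```

Because the prompt ends on an open `A:`, the model tends to continue the pattern set by the single example above it.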

Few Shot Prompting

Few Shot Prompting is done by giving more than one example of how you want the AI to respond.

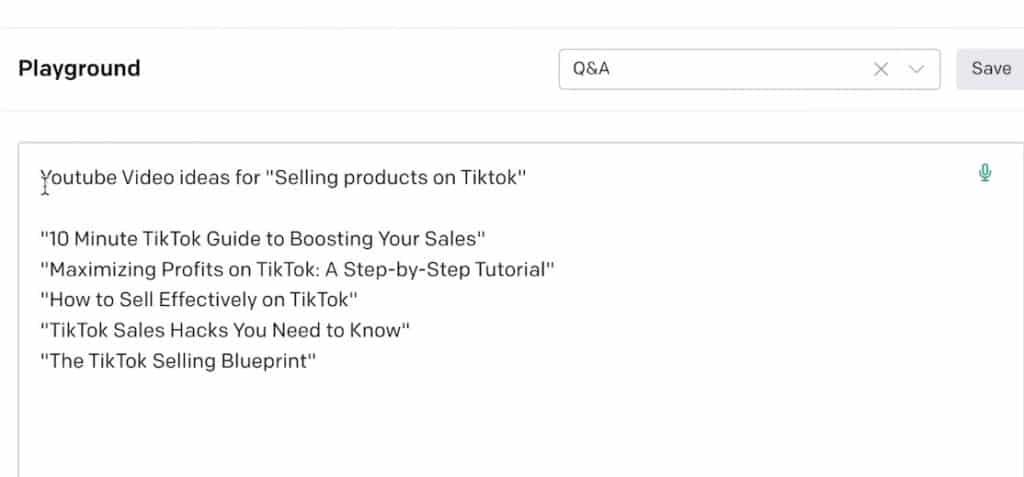

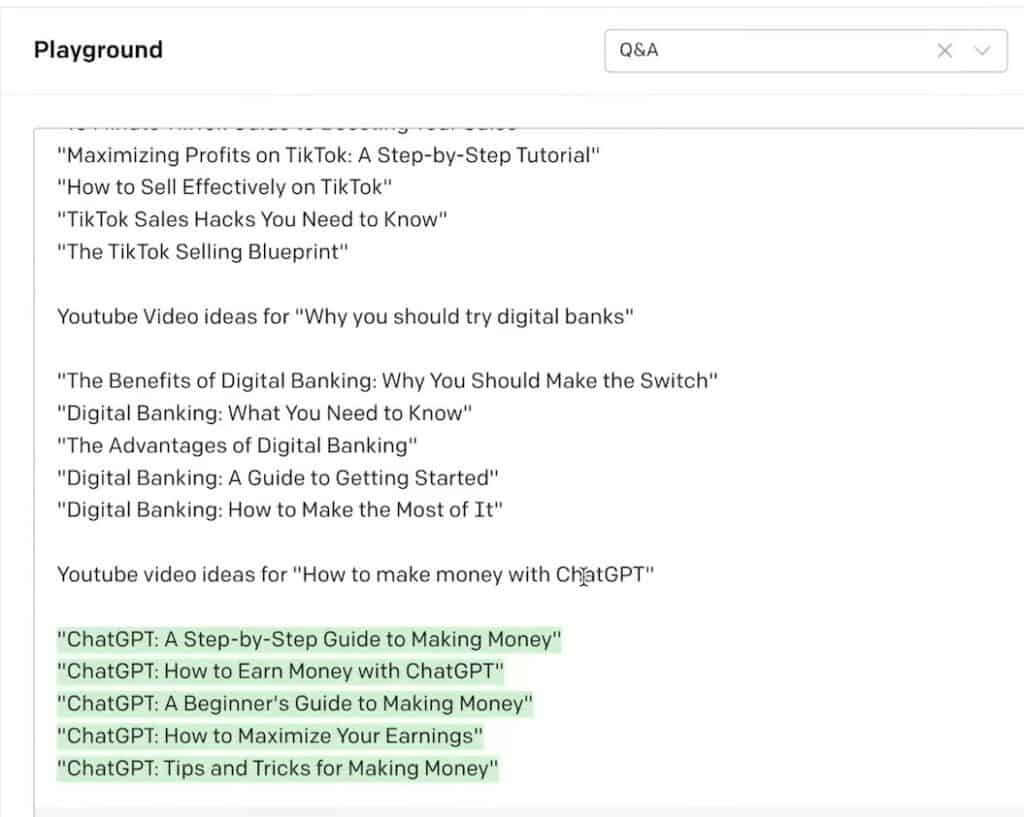

Here, I have a little prompt made up of a YouTube video idea generator.

What I’ve done is set up a question and answer pair.

The question – “YouTube video ideas for selling products on TikTok”.

Then I’ve given 5 examples that I just took from ChatGPT and put them in there.

💡 Pro Tip: This data here is really important. If you’re trying to use Few Shot to get a particular result, the things you’re putting in as examples matter a lot.

Now, if I add in the rest of the prompt, I have this next part, which is the second shot of the few shot prompt, “YouTube video ideas for why you should try digital banks”

I’ve given another 5 examples and all I need to do now is to paste in another question – “YouTube video ideas for how to make money with Chat GPT”.

And the AI is going to look at my previous shot prompts and then give me an answer based on the structure and content of those previous prompts.

And just like that, we’ve taken GPT-3 and turned it into a YouTube video idea generator, which is based on the styles that I like and titles which are provided here using the few shot method.

By adding more and more examples, you’re able to more precisely define the output that you want.

Crucial aspects of your response, like the tone, length, and structure, can all be determined by the examples that you provide.
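The few shot pattern above can be sketched as a small prompt builder. The titles below are placeholders rather than the ones from my screenshots, and the numbered-list layout is an assumption about how you might format the shots.

```python
def build_few_shot_prompt(examples, new_topic, header="YouTube video ideas for"):
    """Build a few shot prompt from (topic, ideas) pairs plus one new topic."""
    blocks = []
    for topic, ideas in examples:
        lines = [f"{header} {topic}:"]
        lines += [f"{i}. {idea}" for i, idea in enumerate(ideas, start=1)]
        blocks.append("\n".join(lines))
    # End on an open list item so the model continues in the same format.
    blocks.append(f"{header} {new_topic}:\n1.")
    return "\n\n".join(blocks)


examples = [
    ("selling products on TikTok",
     ["Placeholder title A", "Placeholder title B"]),
    ("why you should try digital banks",
     ["Placeholder title C", "Placeholder title D"]),
]
print(build_few_shot_prompt(examples, "how to make money with ChatGPT"))
```

Adding more pairs to `examples` is the code equivalent of adding more shots, so the tone, length, and structure of the output follow whatever you put in there.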

Another example would be a Q&A bot.

When prompted with a question at the end, the AI is going to take a look at the role prompt at the top, get set into the role, and then look through all of these examples provided and go “okay, these are the kinds of responses that I’m expected to give.”

These are how long they are. This is the tone of voice. This is the structure. This is how you teach it to give you the results that you want.

If, for example, you were to take these answers and expand on them and make them a whole paragraph and did that for each and every question, then when you ask it a question, it’s going to give you back a full paragraph as well.

It’s important to understand why shot prompting works so well: large language models are essentially just pattern recognition and generation machines.

Chain of Thought Prompting

Another handy tool to have in your prompt engineering toolkit in order to extract the most value out of these models is called chain of thought prompting.

Chain of thought prompting encourages the large language model to explain its reasoning as it goes through the steps, which typically results in better and more accurate results.

The increase in accuracy is particularly noted in:

- Arithmetic tasks

- Common sense tasks, and

- Symbolic reasoning tasks

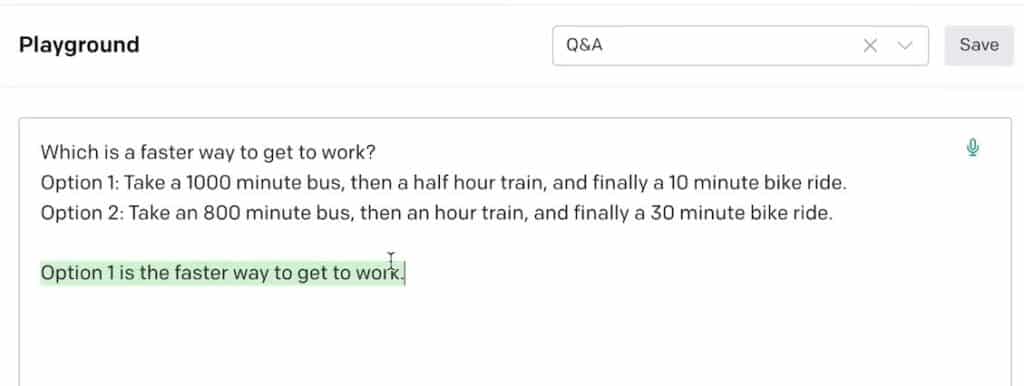

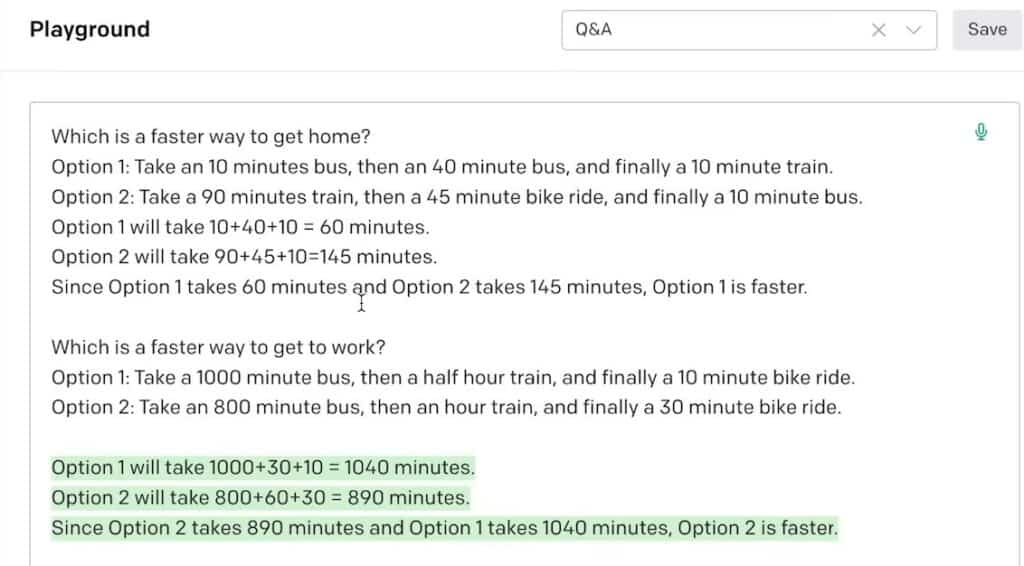

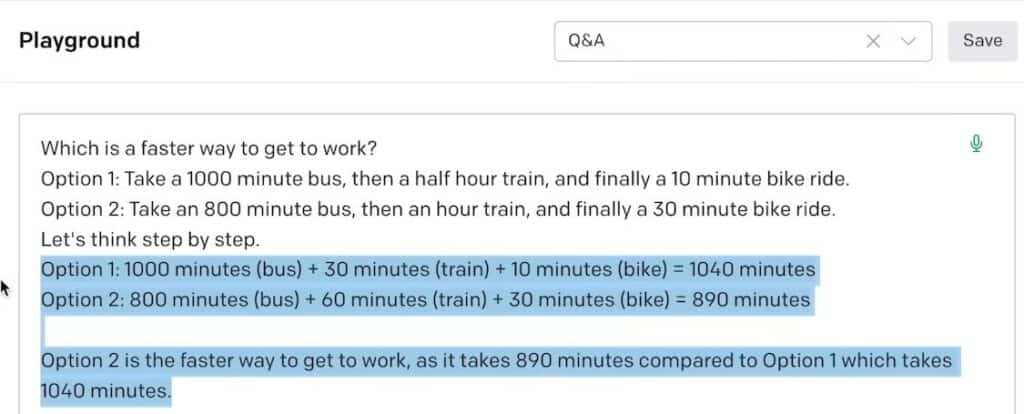

Here we have a word problem that asks which is the faster way to get to work.

The answer I get back is that option 1 is the faster way to work.

What we find out when we change the prompt is that that was actually the incorrect answer.

If we change the prompt around and make it explain the thinking, it actually comes out with a different answer, which is option 2.

Chain of thought prompting like this is really handy for these specific kinds of tasks. It’s a great thing to have in your toolkit as a prompt engineer.

Another method of chain of thought prompting is called zero shot chain of thought prompting.

If we add the magic little phrase “Let’s think step by step” to our zero shot prompt, we get a different answer from the one we got before.

Just like that, we’ve got the correct answer, which is option 2 by asking it to think step by step for us.

You may be thinking, what’s the difference between a one shot answer and a zero shot chain of thought answer like this?

Well, in this situation it’s very easy to create a one shot or few shot prompt by thinking up a few examples and tweaking this question around a bit.

When the task is far more complex, sometimes getting multiple examples or even just a single example to use in a shot prompt is not possible.

Therefore, this little magic phrase, “Let’s think step by step”, can be the difference between extracting correct and incorrect answers with your prompts.
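In code, zero shot chain of thought is about as simple as it gets: append the phrase to the end of the prompt. This is just a sketch, and the helper name and the sample question are hypothetical.

```python
COT_TRIGGER = "Let's think step by step."


def add_chain_of_thought(prompt, trigger=COT_TRIGGER):
    """Append the step-by-step phrase unless the prompt already ends with it."""
    prompt = prompt.rstrip()
    if prompt.endswith(trigger):
        return prompt
    return f"{prompt}\n\n{trigger}"


question = "Which option is the faster way to get to work?"  # illustrative only
print(add_chain_of_thought(question))
```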

Now that you understand the basics, we can get into what you’re really here for: the biggest opportunities for prompt engineers in 2023 and beyond.

What Are The Biggest Opportunities for Prompt Engineers in 2023?

Experts like Dr. Alan D. Thompson have said that we have one to two years in which prompt engineering will be extremely valuable, but that it will soon be replaced by artificial intelligence that can write its own prompts.

So within this two year period, how can we get the most out of this highly lucrative skill?

Sell Your Services as a Prompt Engineer

First and most obvious is to sell your services as a prompt engineer. Demand for this skill is exploding right now. Give yourself a month or two to learn it and become an expert and then start going out and trying to find your own gigs.

Companies around the world are hiring for this right now, so all you need to do is learn the skill and get out there and start knocking on doors.

Create a Teaching Business out of Prompt Engineering

The second opportunity that I see is to create a teaching business out of prompt engineering. We are going to see companies all across the world have to pivot towards understanding and using these models.

One of the easiest ways for these companies to tap into this AI revolution that’s happening and start to use these tools to increase the productivity of their business and employees is to teach them how to use these tools.

If you can go into these companies and teach them skills like prompt engineering and give them a suite of tools that they can use to improve their productivity, then you’re going to have some seriously good opportunities to start making money by selling to these big companies.

Start an AI Business

Finally, my favorite way of making money with prompt engineering is by building businesses around one well-written prompt.

We are seeing extreme amounts of value being unlocked with just one well-written prompt.

An awesome example of this is Leta AI by Dr. Alan D. Thompson. If you haven’t looked into him already, I suggest you check him out.

What he’s done is take a GPT-3 model and write up a very specific prompt, and with that prompt he’s created an AI assistant called Leta.

By writing such a quality prompt, he’s able to create an AI that has exactly the right character that he wants.

What he’s done is set up a webcam and he’s been interacting face to face talking to this AI and sharing it with the world.

While he hasn’t monetized it yet and it’s more of a research project, it is insane to see what just a few sentences of well-written text can do to transform these powerful language models into entirely new and powerful things in their own right.

This is an example of how you can use prompts and pull in different bits of data and pull in user input in order to create a little tool. If you took this and put it on a website and did a little bit of marketing, I’m sure you’d be able to get some money coming in off the back of one of these very basic tools.

I seriously think doing prompt engineering like this to alter these models is an even lower barrier to entry for people looking to build businesses with AI. All it takes is one carefully written prompt and maybe a little bit of user input matched together and you can create a valuable business in like half an hour.
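As a rough sketch of “one carefully written prompt plus a little bit of user input”, here is a prompt template that gets filled in with whatever the user types. The product description use case, the template wording, and the function names are all invented for illustration; the rendered text is what you would then send to the model.

```python
from string import Template

# Hypothetical prompt for a tiny product description tool.
PROMPT_TEMPLATE = Template(
    "You are a helpful assistant that writes product descriptions.\n\n"
    "Product name: $name\n"
    "Key features: $features\n\n"
    "Description:"
)


def clean_input(text, max_chars=500):
    """Collapse whitespace and cap the length so user input cannot bloat the prompt."""
    return " ".join(text.split())[:max_chars]


def render_prompt(name, features):
    """Fill the fixed prompt with cleaned-up user input."""
    return PROMPT_TEMPLATE.substitute(
        name=clean_input(name),
        features=clean_input(features),
    )


print(render_prompt("  Solar   garden lamp ", "bright,\nwaterproof, charges in daylight"))
```

The prompt itself stays fixed and only the user’s fields change, which is why a single well-written prompt can sit behind a whole tool.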

Prompt Engineering Final Words

Now you know the basics of prompt engineering.

You can go out there and start practicing it more, start selling your services, start teaching people, or start building businesses based on writing prompts.

Patryk Miszczak